Well, I was thinking, you know, that we should do some penal reform around here. I mean, we can't have vendepatrias sitting in their own houses, doing their usual jobs, at the expense of the State, with a joint and a line or two of coke every now and again, can we?

So I had an idea. We make a NEW SAN PEDRO down around San José de Uchupiamonas, and we make it a proper prison. So one man, or pretty young lady, to a cell, which contains only a bed, and you each get an Amazon Kindle, and the Government decides what appears on it. And the cell is a Faraday cage, so it's radio quiet, and you get one visitor a week, for half an hour, and he or she must agree to wear a radio tag and to total surveillance of his or her communications, and must not leave the country, etc., etc. And you can talk to him or her for half an hour, through a speaker and microphone, with a guard standing half a meter behind each of you, and the whole conversation will be recorded, and analysed in detail, which is why we can only let you have half an hour, sorry. And you will grow your own food and learn interesting crafts, so it won't be all that bad, will it, for you? I don't know how much your friends and family are going to be able to put up with though, do you?

Sorry, I'm just cheesed off that I have only four students who're too shit-scared to talk to me. So I think I'll go and watch BTV for a few hours, and I hope whilst I do that someone brings back my "medicine" bag of coca with the packet of cigarettes and the lighter. You can keep the notes, I've got that stuff, and I don't mind starving, but I get really fucking grumpy when I haven't got cigarettes or coca. And, well, Bolivia's TV is great, so I'll watch that for a few hours, smoke a few cigarettes, and calm down, and hopefully when I come back here I will have a shit-load of e-mail, and someone will have apologized to my family for treating them like total shit, and someone will go along to Migración and sort out my PT1 for next week, and then everyone will be happy, won't we! I know I will. I don't fancy having to spend months every year lecturing political prisoners in a re-education programme in the tropics, I mean, it would be like Cambodia, wouldn't it? But still, San José is a nice place, and I have a lot of friends down there. And maybe someone will have sent all I've written in the past six years to the President, right fucking now, so that I see and hear something very, very strongly positive to the effect that he knows what is going on and has someone on the case.

That would be nice, because then we need never hear about any of this ridiculous farrago again, and we can get on with the process of saving the life of the Pachamama, which is important, because when she goes you people will not know you're insane, it'll be like a slaughter-fest in a lunatic asylum, all over the world. That's not good.

Buck up your ideas, and never, ever fuck with me again, I hope you understand that.

Yours sincerely

Ian Alan Neil Grant

C.I. E0033311

.

Sunday, 19 July 2015

Saturday, 18 July 2015

Bootstrapping Secure Global Communications

The best possible cryptographic keys are random shared secrets, used as one-time pads. This is because if the pads are random and if no part of a pad is ever used twice, then there is by definition no logical connection between the cyphertext and the plaintext. This in turn is because, if the probability of any given bit of the pad being set is 50%, then each bit of the cyphertext has a 50% probability of being a genuine bit of the plaintext, and a 50% probability of having been flipped. Therefore an attacker who has possession of only the cyphertext has no more information about the plaintext than he would expect to obtain by guessing, or flipping a "fair coin". So the cyphertext is effectively random, as far as he is concerned, and therefore so is the plaintext.
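As a minimal sketch of the idea, assuming the pad is combined with the message by bitwise XOR (the usual construction, though the text above does not name one), the following shows a pad being used once to encode and decode. The function name `xor_bytes` and the sample messages are illustrative only.

```python
import secrets


def xor_bytes(data: bytes, pad: bytes) -> bytes:
    """XOR data with an equally long pad; the same call encodes and decodes."""
    if len(pad) != len(data):
        raise ValueError("pad must be exactly as long as the data")
    return bytes(d ^ p for d, p in zip(data, pad))


plaintext = b"meet at dawn"
pad = secrets.token_bytes(len(plaintext))  # random shared secret, used once
cyphertext = xor_bytes(plaintext, pad)
recovered = xor_bytes(cyphertext, pad)

# For any other message of the same length there is some pad that would
# "decode" the cyphertext to it, so the cyphertext alone favours no message.
decoy = b"stay at home"
decoy_pad = xor_bytes(cyphertext, decoy)
```

Here `decoy_pad` is the pad under which the very same cyphertext would read "stay at home", which is one way of seeing why the attacker is reduced to guessing.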

The problem with one-time pads is that the pads must be kept secret by both parties for at least the full lifetime of any cyphertext they were used to encode, and if they are to be genuinely one-time then they will only allow a finite number of bits to be securely transmitted.

We can solve the first problem by keeping the one-time pads secret forever. And we can solve the second problem once we recognise what we really mean by the term random, which is another way of saying that there is no knowable reason why any particular bit of the pad should be either 0 or 1. And so what is random information and what is not depends upon what we know, and not on the information itself. This is the case with the random numbers chosen for one-time pads: from the point of view of the communicating agents the pads are not random, but from the point of view of someone not in possession of the information, they are random.

It follows then that provided we assume the system is indeed secure to start with, then we can use the initial one-time pad to encrypt the exchange of further pad data for subsequent messages, provided we are careful to ensure that the attacker never has a crib, which is a piece of data which he knows is probably a part of the plaintext. The possibility of a crib arises because of the symmetry, from the point of view of the external observer, between a random pad and plaintext. We can avoid the possibility of cribs fairly straightforwardly by using a channel protocol which allows the sender to insert messages into the stream at random, which messages instruct the receiver to alter the encryption algorithm to be used for subsequent messages. If the alterations to the algorithm are in the form of fragments of executable program code, which describe mathematical functions which can be automatically composed in some way with the existing encryption that is in effect, then the attacker will not have any plausible cribs, because he would have no reason to suppose any one program fragment is any more likely than any other. We may then reason circularly, that our initial assumption that the system is indeed secure, holds good, and consequently that we can continue to use it to securely transmit all further one-time pads over the same channel, ad infinitum.
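The mechanics of sending further pad data under the current pad might be sketched as follows. This is only an illustration under stated assumptions: the class `PadChannel` and its methods are hypothetical names, XOR is assumed as the combining operation, and the random insertion of algorithm-altering program fragments described above is not modelled at all.

```python
import secrets


def xor_bytes(data: bytes, pad: bytes) -> bytes:
    """XOR two equally long byte strings."""
    if len(pad) != len(data):
        raise ValueError("pad must be exactly as long as the data")
    return bytes(d ^ p for d, p in zip(data, pad))


class PadChannel:
    """One end of a channel holding a store of unused pad bytes (hypothetical)."""

    def __init__(self, pad: bytes):
        self.pad = bytearray(pad)

    def _take(self, n: int) -> bytes:
        # Pad bytes are consumed as they are used, so none is used twice.
        if n > len(self.pad):
            raise ValueError("not enough unused pad left")
        chunk = bytes(self.pad[:n])
        del self.pad[:n]
        return chunk

    def seal(self, data: bytes) -> bytes:
        return xor_bytes(data, self._take(len(data)))

    def unseal(self, data: bytes) -> bytes:
        return xor_bytes(data, self._take(len(data)))

    def extend(self, fresh: bytes) -> None:
        self.pad.extend(fresh)


initial = secrets.token_bytes(32)
sender, receiver = PadChannel(initial), PadChannel(initial)

fresh = secrets.token_bytes(16)       # further pad data chosen by the sender
wire = sender.seal(fresh)             # uses up 16 bytes of the current pad
received = receiver.unseal(wire)
sender.extend(fresh)
receiver.extend(received)
```

In this sketch both ends stay in step because each consumes exactly the bytes the other consumed, then appends the same fresh material.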

The problem is thus reduced to just that of securing the initial exchange of one-time pads. It may at first seem that secure pad exchange could never be achieved without some direct communication between the two parties, so that they can be certain they are not communicating through an unknown man-in-the-middle who has access to one or both of the initial one-time pads, and who can therefore read all the messages they exchange on that channel. The problem of pad exchange is not a problem of security, however, but rather one of identity. And again, we can solve it by thinking carefully about what we really mean by the term identity.

Contrary to popular opinion, someone's identity is not "who they really are," nor even "who they know they are." Such definitions of identity are practically unverifiable, because they don't tell us anything at all about the individual concerned. If you are in La Paz, Bolivia, for example, you probably cannot get a new UK passport by sending a letter to the British Embassy in Washington D.C. and declaring "I'm me!" They need to know who you are before they can identify you as the one-time holder of British Passport No. 307906487. So identity is not a matter of who someone really is, it is a matter of what everyone else knows about that person, in other words, it is common knowledge. So in order to establish the identity of someone with whom we wish to communicate, all we need is to establish sufficient common knowledge to convince ourselves that this person with whom we are in communication is indeed the same person of whom we share some of the common knowledge. You may well wonder then why we need identity documents to serve as so-called credentials, and the answer is simply that we don't, and the proof is that identity documents can be, and often are, forged, but not even the CIA can forge common knowledge. If this sounds a bit puzzling, then don't worry, you're in good company: Bertrand Russell didn't seem to understand it either. But you, dear reader, can do better, just by thinking over the problem for a while and discussing it with your friends in a cooperative and inquiring manner. Thus, as we found was the case in the notion of randomness, the notion of identity too is a matter, not of fact, but of the knowledge others may or may not have.

Since we are intending to use our knowledge of the identity of the person with whom we are communicating to establish a secure communications channel, we can once again assume that the channel is indeed secure, and on the basis of this assumption, we can use that same channel to establish beyond any reasonable doubt that the person with whom we are communicating is indeed the person of whom we share some of the common knowledge. Then we proceed on the basis of directly shared common knowledge, in the form of biometric information, such as photographs, fingerprints, iris patterns, spoken voice recordings and DNA samples, combined with shared personal knowledge of life history, which may include such details as "one-time holder of British Passport No. 307906487", or it may not.

Having thus established sufficient direct, i.e. biometric, knowledge of one another's identity, we can then fairly straightforwardly extend this throughout the whole network, using shared multi-party communications. The principle is that we pass the biometric information -- information representing the direct knowledge people have of other people's bodies and brains -- around the network, so that any path on the resulting graph will be a chain of trust extending from the direct knowledge individuals have of one another, and passing via other chains of trust, to form what mathematicians call the transitive closure of the trust relation, which is ultimately founded on individual direct knowledge particular people have about each other.
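To make the term concrete, here is a small sketch of computing the transitive closure of a direct-knowledge relation by breadth-first search. The names (`trust_closure`, the four people) are made up for illustration, and the sketch leaves each person out of their own closure, which a strict mathematical definition would not necessarily do.

```python
from collections import deque


def trust_closure(direct: dict[str, set[str]]) -> dict[str, set[str]]:
    """Map each person to everyone reachable along chains of direct knowledge."""
    closure = {}
    for start in direct:
        reached = set()
        queue = deque(direct[start])
        while queue:
            person = queue.popleft()
            if person == start or person in reached:
                continue
            reached.add(person)
            queue.extend(direct.get(person, ()))
        closure[start] = reached
    return closure


# Who has direct (e.g. biometric) knowledge of whom; purely invented data.
direct = {
    "ana": {"ben"},
    "ben": {"ana", "caz"},
    "caz": {"ben", "dev"},
    "dev": {"caz"},
}
closure = trust_closure(direct)
```

In this example "ana" directly knows only "ben", yet a chain of trust reaches "caz" and "dev" as well.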

Then what we mean by Identity-with-a-capital-I amounts to the common knowledge which the whole of Humanity has, as to the essential identity of those individuals. This essential identity that individuals have in the common knowledge as a whole is strictly more than just their physical or bodily identity, or indeed their biographical and genealogical identities -- all of which are accidents of their being, and which inevitably change with the passage of time -- their Identity within the whole of common knowledge is their higher eternal identity of mind.

During this process of sharing the information we have about people, we hope not to have to rely on any single "authoritative" source of information as to an individual's identity, because that would be no better than a passport. We need to find an algorithm which can somehow quantify the trustworthiness of any statement as to the identity of any individual, from the point of view of any other, in terms of the number of independent sources available, and the degree to which those sources corroborate each other. One possible method might be to include only the sources amongst which there is no disagreement whatsoever, and assign a score of one to each of them, but that might not be sufficient to establish the web initially, and it may be necessary to allow degrees of disagreement to be accounted for by adjusting the weights of evidence arriving via the different chains. Whatever particular algorithm is chosen, it should be computed in a distributed manner, so that as any individual's identity information floods through the network, the calculation of the quantitative degree of trust is carried out at each node. Perhaps we could ask the people at Google how to do this sort of thing?
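The two scoring ideas in the paragraph above could be sketched like this, as they might run at a single node. Everything here is an assumption for illustration: the report format (a chain name paired with the claim that arrived over it), the function names, and the particular weights are not taken from any existing system.

```python
from collections import defaultdict


def unanimous_score(reports: list[tuple[str, str]]) -> int:
    """Score one per source, but only if no two sources disagree."""
    claims = {claim for _, claim in reports}
    return len(reports) if len(claims) == 1 else 0


def weighted_scores(reports: list[tuple[str, str]],
                    weights: dict[str, float]) -> dict[str, float]:
    """Share of the total weight of evidence behind each competing claim."""
    totals = defaultdict(float)
    for chain, claim in reports:
        totals[claim] += weights.get(chain, 1.0)
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {claim: total / grand_total for claim, total in totals.items()}


# Three chains report a fingerprint for the same individual; one disagrees.
reports = [("via_ben", "fp:1a2b"), ("via_caz", "fp:1a2b"), ("via_dev", "fp:ffff")]
weights = {"via_ben": 1.0, "via_caz": 1.0, "via_dev": 0.5}

strict = unanimous_score(reports)
agreeing = unanimous_score(reports[:2])
scores = weighted_scores(reports, weights)
```

The strict rule gives up as soon as one chain disagrees, whereas the weighted rule still ranks the corroborated claim well ahead of the dissenting one. Neither sketch checks that the chains really are independent, which is the harder part of the problem.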

An indicator of the feasibility of establishing a transitive web of trust is the notion sometimes called "six degrees of separation". A similar notion is evident in "The Kevin Bacon Game", in which people are given the name of an actor or actress, and have to try to find the shortest chain of actors who have appeared together in films, and which connect that person with Kevin Bacon. But there are a great many different ways, apart from acting together in movies, in which different people may be connected to one another in common knowledge. So when we combine all possible connections, we may find that the "degree of separation" is in fact closer to one than to six. In other words, as we include more and more genealogical and biographical information about ourselves in the system, we will surely find that we share many, many more connections between ourselves (not to mention Kevin Bacon) than were ever immediately apparent.
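A toy illustration of how combining kinds of connection shortens the chains, assuming each kind of connection is given as a list of pairs of people (the data and names below are invented):

```python
from collections import deque


def merge(*relations: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Combine several symmetric relations into one adjacency map."""
    graph: dict[str, set[str]] = {}
    for relation in relations:
        for a, b in relation:
            graph.setdefault(a, set()).add(b)
            graph.setdefault(b, set()).add(a)
    return graph


def separation(graph: dict[str, set[str]], start: str, goal: str):
    """Length of the shortest chain from start to goal, or None if unconnected."""
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        person, distance = queue.popleft()
        for neighbour in graph.get(person, ()):
            if neighbour == goal:
                return distance + 1
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, distance + 1))
    return None


films = [("ana", "ben"), ("ben", "caz"), ("caz", "dev")]   # acted together
schools = [("ana", "dev")]                                 # went to school together

by_films = separation(merge(films), "ana", "dev")
combined = separation(merge(films, schools), "ana", "dev")
```

With films alone the two are three steps apart; once a second kind of connection is included they turn out to be directly connected.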

The final flourish will be to provide a "launchpad" whereby individuals could prime certain "addresses" to accept one or more programs from one or more different types of communications media, such as SMS messages, Web Server URLs, IP Datagrams, USB flash memory devices, MicroSD cards attached to the legs of migrating Canada Geese, or scanned pieces of paper recovered from floating bottles, and run those programs, passing the output of one to the input of another. As well as exchanging the initial one-time pad and identity credentials, each pair of people authenticating would exchange, let's say six, primes, which are each sets of two 10-digit random addresses, and a 10-digit random pad. If each person then authenticated with another six others, then each would have six possible nodes to which they could send programs, and which programs could trigger the sending of further messages, either directly, or indirectly via other primes, depending on the particular programs actually sent. These prime sheets would not be transmitted electronically, in the first-stage bootstrap at least; they would be little pieces of hand-written paper, and the bearer would record separately to which physical address and protocol any sheet of primes corresponds.
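For concreteness, a sheet of six primes of the shape described (two 10-digit random addresses and a 10-digit random pad each) could be generated as below before being copied out by hand. The field names `address_a`, `address_b` and `pad` are hypothetical; the text does not say what role each of the two addresses plays.

```python
import secrets
from dataclasses import dataclass


def ten_digits() -> str:
    """A uniformly random 10-digit decimal string, leading zeros allowed."""
    return f"{secrets.randbelow(10**10):010d}"


@dataclass(frozen=True)
class Prime:
    address_a: str   # first 10-digit random address (role assumed, not specified)
    address_b: str   # second 10-digit random address
    pad: str         # 10-digit random pad


def prime_sheet(count: int = 6) -> list[Prime]:
    """One sheet of primes to hand to a peer during authentication."""
    return [Prime(ten_digits(), ten_digits(), ten_digits()) for _ in range(count)]


sheet = prime_sheet()
for prime in sheet:
    print(prime.address_a, prime.address_b, prime.pad)
```

Which physical address and protocol a sheet corresponds to is deliberately not part of this structure, since the bearer is meant to record that separately.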

The purpose of the primes is to provide an asynchronous boot process, so that the initial message exchange that triggers, say, reading of a one-time pad by the receiver, is not correlated in any externally observable way, with the actions of the sender of that message, to whom that pad in fact corresponds. The same mechanism also allows us to keep the initial pads a secret for ever, because we can arrange for the actual pads that are used to be hybrids of the pads we know, based on the order in which the initial messages just happen to arrive at their destinations, and then we ourselves could not at any point actually know that order, any better than a would-be attacker could. And if we don't actually know the secret, then we can't "spill the beans," even under extreme duress.
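One way a hybrid pad could depend on arrival order is sketched below. The combining rule is entirely an assumption made for illustration (the text does not specify one): the k-th pad to arrive is rotated by k bytes and all of them are XORed together, so the same pads arriving in a different order give a different working pad.

```python
from functools import reduce


def rotate(pad: bytes, k: int) -> bytes:
    """Rotate a non-empty pad left by k bytes."""
    k %= len(pad)
    return pad[k:] + pad[:k]


def hybrid_pad(pads_in_arrival_order: list[bytes]) -> bytes:
    """XOR equally long pads together, each rotated by its arrival position."""
    rotated = [rotate(pad, position)
               for position, pad in enumerate(pads_in_arrival_order)]
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*rotated))


a = bytes([1, 2, 3, 4])
b = bytes([16, 32, 48, 64])

a_first = hybrid_pad([a, b])
b_first = hybrid_pad([b, a])
```

Someone who knows both `a` and `b` but not which arrived first cannot tell which of the two hybrids is actually in use, which is the property the paragraph above relies on.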

The reason we suggest n primes shared between each of n peers is to make possible the random exchange of primes to defeat an attacker who can observe all the parties engaging in pad-exchanges and thereby identify all the potential participants. Provided he cannot observe which primes are swapped during authentication, he will not be able to correlate the transmission and reception of the first step of each initial message transmission.

It should be clear that what we have described above is just one possible way to start a network. One would not expect to be able to have complete confidence in the result, especially given the impossibility of actually knowing that the network has not actually been compromised. So one should expect to have to iterate the process, using several independent first-stage networks as transport media to bootstrap a second-stage. Then proceed to a third-stage from several independent second-stage networks, etc. etc.

I hope this is not too hairy. I think it is just about possible for someone to get the gist of it from prose alone, without using diagrams or notes. Edsger Dijkstra recommended this as a good way of making sure an algorithm isn't too complicated. I hope he's right!

So much for the theory, then. That's just to get the academics off our backs and buy ourselves a bit of breathing space. Don't wait for them to unanimously agree that it's a Good Thing, they never will. Make the most of it by just getting on with building it. Actual security is not theoretical, it's practical, and it's not a matter of fact, it's a matter of knowledge. I say "it", but I mean them. Don't just write millions of lines of technical bit-twiddling code implementing one huge rat's nest of networking wire, like ARPANET, then wait for it to be hacked, because it will be hacked. In fact, try not to write any code at all. Certainly, you should not be exchanging code. You should exchange only formal definitions, and you should independently develop the absolute minimum of manually-written code you need to automatically translate those formal definitions into actual working, interpretable or compilable computer languages.

All we need are a few neat and simple bytecode language definitions, and a few platforms like Node.js for writing low-level interpreters.

For the languages, look at Reynolds's "Definitional Interpreters". Write translators which parse those interpreters and JIT compile GNU lightning or LLVM IR implementations: both of these support tail-recursion, and will easily interpret Reynolds's interpreter IV. Then write a term-rewriting system in that; look at PLT Redex to get some ideas of how you can make a system with completely configurable semantics. If you've got the guts, look at System F and Proofs and Types, but as soon as you think you're about to go nuts, put it down and write some concrete code. But not too much, just for therapy, you know! Try writing a Reynolds-style interpreter for System F, and compile it into lightning or LLVM, or something else. If that works, try defining lightning as System F primitives of "atomic" type. For parsers, look at Tom Ridge's P3 and P4 parsers: they will be easy to write in System F and Reynolds's III/IV and V.
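To give a flavour of what a definitional interpreter looks like, here is a very small sketch, loosely in the style of the first, higher-order interpreter in Reynolds's paper, where functions of the defined language are represented by functions of the defining language. The term encoding (tagged tuples) and the `succ` primitive are choices made for this illustration, not taken from the paper.

```python
def evaluate(term, env):
    """Evaluate a term, given as a tagged tuple, in an environment (a dict)."""
    tag = term[0]
    if tag == "const":                      # ("const", value)
        return term[1]
    if tag == "var":                        # ("var", name)
        return env[term[1]]
    if tag == "lam":                        # ("lam", parameter, body)
        _, parameter, body = term
        # A defined-language function becomes a Python closure over env.
        return lambda argument: evaluate(body, {**env, parameter: argument})
    if tag == "app":                        # ("app", function, argument)
        _, function, argument = term
        return evaluate(function, env)(evaluate(argument, env))
    if tag == "if":                         # ("if", condition, then, else)
        _, condition, then_branch, else_branch = term
        chosen = then_branch if evaluate(condition, env) else else_branch
        return evaluate(chosen, env)
    raise ValueError(f"unknown term: {term!r}")


initial_env = {"succ": lambda n: n + 1}

# (lambda x. succ (succ x)) 40
program = ("app",
           ("lam", "x", ("app", ("var", "succ"),
                         ("app", ("var", "succ"), ("var", "x")))),
           ("const", 40))
result = evaluate(program, initial_env)
print(result)
```

Because this version leans on Python's own functions and call stack, it inherits Python's evaluation order and recursion limits; the later interpreters in the paper remove exactly that kind of dependence on the defining language.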

For the interpreters, look at the ML Kit, which, in the guise of SMLtoJs, lets you write browser JavaScript in a really nice typed functional language, and will be fairly easy to coerce into writing neat little JavaScript server apps in ML. I wouldn't bother with SMLServer though, at least while it needs Apache to run.

And while you do that, exchange primes, under many and various schemes, at every available opportunity. Make friends with really weird people and set up networks with them. Don't think of networks as precious. Play around with them, try to break them deliberately, and report only the negative results publicly. We don't need another OpenBSD honeypot. Don't be afraid to set up "spare" networks, and "spoof" networks and redundant duplicates. The more smoke around, the sooner the little girls at the NSA and GCHQ will say "Fuck it, let's just join in." And don't turn them away. You need secure communications with your enemy much more than you need them with your friends. You are not going to start a war with your friends, and create a new extremist religious state, over a silly little misunderstanding, are you?

Read about the X.500 directory service. Directories are vital, and having a good formal description from which you can automatically generate implementations in any language will make the higher-level functions, like mail, instant-messaging, and distributed JavaScript RAID disk block loops running on Chrome browsers, easier to configure remotely.

The three laws of metarobotics are

And it's fun!

So much for the theory, then. That's just to get the academics off our backs and buy ourselves a bit of breathing space. Don't wait for them to unanimously agree that it's a Good Thing, they never will. Make the most of it by just getting on with building it. Actual security is not theoretical, it's practical, and it's not a matter of fact, it's a matter of knowledge. I say "it", but I mean them. Don't just write millions of lines of technical bit-twiddling code implementing one huge rat's nest of networking wire, like ARPANET, then wait for it to be hacked, because it will be hacked. In fact, try not to write any code at all. Certainly, you should not be exchanging code. You should exchange only formal definitions, and you should independently develop the absolute minimum of manually-written code you need to automatically translate those formal definitions into actual working, interpretable or compilable computer languages.

All we need are a few neat and simple bytecode language definitions, and a few languages like node.js for writing low-level interpreters.

For the languages, look at Reynold's "Definitional Interpreters". Write translators which parse those interpreters and JIT compile lightning or LLVM IL implementations: both of these support tail-recursion, and will easily interpret Reynold's interpreter IV. Then write a term-rewriting system in that, look at PLT redex to get some ideas of how you can make a system with completely configurable semantics. If you've got the guts, look at System F and proofs and Types, but as soon as you think you're about to go nuts, put it down and write some concrete code. But not too much, just for therapy, you know! Try writing a Reynolds-style interpreter for System F, and compile it into lightning or LLVM, or something else. If that works, try defining lightning as system F primitives of "atomic" type. For parsers, look at Tom Ridge's P3 and P4 parsers: they will be easy to write in System F and Reynold's III/IV and V.

For the interpreters, look at the ML Kit, which, in the guise of smltojs, lets you write browser JavaScript in a really nice typed functional language, and will be fairly easy to coerce into writing neat little JavaScript server apps in ML. I wouldn't bother with SMLServer though, at least while it needs Apache to run.

And while you do that, exchange primes, under many and various schemes, at every available opportunity. Make friends with really weird people and set up networks with them. Don't think of networks as precious. Play around with them, try to break them deliberately, and report only the negative results publicly. We don't need another OpenBSD honeypot. Don't be afraid to set up "spare" networks, and "spoof" networks and redundant duplicates. The more smoke around the sooner the little girtls at the NSA and GCHQ will say "Fuck it, let's just join in." And don't turn them away. You need secure communications with your enemy much more than you need them with your friends. You are not going to start a war with with your friends, and create a new extremist religious state, over a silly little misunderstanding, are you?

Read about the X.500 directory service. Directories are vital, and having a good formal description from which you can automatically generate implementations in any language will make the higher-level functions, like mail and instant-messaging, and distributed JavaScript RAID disk block loops running on Chrome browsers, easier to configure remotely.

The three laws of metarobotics are

- Don't steal.

- Don't lie.

- Don't be lazy.

That's all there is to it.

And it's fun!

viernes, 17 de julio de 2015

Why the Widespread Use of FaceBook is a Serious Problem

FaceBook is a national security problem for every country, including the United States of America.

FaceBook is not social media, because FaceBook users do not actually communicate very much to each other using it. Instead, it is social networking. FaceBook users use FaceBook principally to show each other who are their friends, and by doing this, they show each other (and perhaps more importantly, they show themselves) what sort of person they really are.

The national security problem comes from the fact that the combined FaceBook pages of all the people who work for the government, the military, and all their friends, provide a quite comprehensive picture of the social network of those people. And for anyone who has access to the statistics about real-time use of the system, the amount of "useful" information is multiplied a hundredfold or more.

I wrote "useful" because the only uses to which such information can be put are in fact misuses or abuses. The national security problem stems from the fact that the FaceBook servers have access to a dynamic view of the social network of the civil service and the armed forces of any country in which FaceBook is widely used. So any agency which has access to the FaceBook servers and the client datastreams can use that data to identify potential targets of surveillance, harassment, bribery, blackmail, torture or assassination.

For example, an agent A could know who a particular functionary F of a particular government ministry is going to have drinks with this evening, and where and when. Now what if they also know that the functionary is very, very interested in some other person M, but has never had direct contact with her. Now imagine that the agent A has access to a good friend G of that other person M. They instruct the friend G to take the other person M to that place that evening, and thereby introduce the agent A and the third party G to the functionary. Perhaps without the other person M even knowing she was involved, and very probably without raising any suspicions on the part of the functionary F who may well feel it was he who made the contact between the two groups, motivated by his interest in M. The functionary F would not then have the slightest suspicion that his meeting with A had been arranged in any way. The agent A could then proceed to cultivate a relationship with the functionary F and win some confidence, perhaps with a view to later bribery or blackmail.

It's easy to make up more elaborate stories. Perhaps we need someone to write some into a really good tele-novela, or a bestselling thriller, to get people to pay attention to the problem. It is serious.

Now you don't actually need access to the FaceBook servers to do this sort of thing. All you need is a critical mass of "silent FaceBook friends" of employees of the civil service and armed forces and their friends, and the FaceBook rules will allow this network of silent friends to compile a database almost as good as the one the FaceBook servers have.

The solution is to develop the transparent, secure communications system I mentioned in the earlier post today.

Cryptographic Checksums Are Not Certificates

A cryptographic checksum is a many-to-one (that is, non-injective) mathematical function from the integers onto some subset of the integers. The integer that is the result of applying this function to the input is also called the checksum of that input.

The idea, such as it is, of a cryptographic checksum, is to provide a certificate of possession of the input number, but without revealing anything about what that input number might be.

Such a certificate, if only it existed, would have a number of uses. For example, it could be used to verify that the data received was indeed exactly the same data that was transmitted over some communications channel. A cryptographic checksum could also be used, along with a cryptographic key, to digitally sign a message, providing a receiver with a proof that the data it contains is exactly that which the signer intended. In these examples, the message, a stream of binary digits, say, is considered to be just one single integer, albeit usually a huge one.

The ideal cryptographic checksum has two properties, which are in fact mutually exclusive. They are called collision resistance and irreversibility.

To be an effective certificate, the cryptographic checksum must provide some degree of collision resistance. That is to say that it must not map one message, and any other "similar" message, to the same checksum. Otherwise a "small" modification of the input could result in the modified message having the same checksum as the original, and then the certificate would not be useful, because it would be ambiguous, certifying that the message was merely one of many possible messages.

Clearly the idea of collision resistance depends crucially on how one defines the terms "similar" and "small" when referred respectively to messages and to the changes made to messages. In particular, collision resistance is always with respect to some specific encoding of the data in the message, in terms of which the words "similar" and "small" must be defined.

The second requirement, that of irreversibility, means that knowledge of the checksum alone cannot be translated into knowledge of "any part" of the input data which it certifies. Irreversibility too is therefore necessarily in respect of some particular encoding, in terms of which the phrase "any part" must be defined.

In general, collision resistance and irreversibility are clearly mutually contradictory requirements, and the only way to satisfy them both is to qualify the two requirements as being "practical". And again, one needs to define precisely what one means by the terms "practically collision resistant" and "practically irreversible".

Consequently, whenever you see an analysis of the effectiveness of any cryptographic checksum, you will be able to recognise the explicit statements concerning the particular class of encodings, and the definitions of "similar message" and "small change", as well as the definitions of "practically collision resistant" and "practically irreversible", all of which will be given with respect to the particular class of encodings under consideration.
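The many-to-one point can be made concrete with a small sketch. The toy checksum below is SHA-256 truncated to two bytes, which is an assumption chosen purely so that a collision can be found in a fraction of a second; the function names and the big-endian encoding of integers are likewise made up for this illustration. Since there are only 65,536 possible outputs, the pigeonhole principle guarantees that two of the first 65,537 integers share a checksum.

```python
import hashlib


def checksum(n: int, nbytes: int = 2) -> bytes:
    """Toy checksum: the first `nbytes` bytes of SHA-256 over a big-endian
    encoding of the non-negative integer n. The encoding is an assumption
    of this sketch, and a different encoding gives a different function."""
    length = max(1, (n.bit_length() + 7) // 8)
    return hashlib.sha256(n.to_bytes(length, "big")).digest()[:nbytes]


def find_collision(nbytes: int = 2):
    """Search 0, 1, 2, ... until two distinct integers share a checksum."""
    seen = {}
    n = 0
    while True:
        c = checksum(n, nbytes)
        if c in seen:
            return seen[c], n, c
        seen[c] = n
        n += 1


a, b, c = find_collision()
print(f"{a} and {b} both map to checksum {c.hex()}")
```

With the full 32-byte output the same search is still guaranteed to succeed in principle, but not in any practical amount of time, which is exactly the sense in which collision resistance can only ever be "practical".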

HTTPS is Not Security

This is from http://arstechnica.co.uk/security/2015/06/wikipedia-goes-all-https-starting-immediately/ which quotes the Wikipedia group:

"We believe encryption makes the web stronger for everyone. In a world where mass surveillance has become a serious threat to intellectual freedom, secure connections are essential for protecting users around the world. Without encryption, governments can more easily surveil sensitive information, creating a chilling effect, and deterring participation, or in extreme cases they can isolate or discipline citizens."

That belief that "encryption makes the web stronger for everyone" is founded on what, exactly? The answer is Nothing whatsoever.

The Internet has a single point of failure, which is the Common Name or CN record, in the DNS. HTTPS relies on the integrity of the DNS to identify the network route to the SSL root certificate servers, but the DNS is not itself secure. The public keys and common names of the root servers are embedded in the executable programs of web browsers and other client programs, and the only authentication of these executables is via cryptographic checksums which are distributed, yes you guessed right, by HTTPS. Therefore SSL root certificate server access is unauthenticated, and so the entire certificate chain is vulnerable.

End of story.

Reconciling The People's Rights to Privacy with National Security

According to Alan Davidson, former Google executive turned Commerce Department official, strong encryption and law enforcement interests are not “irreconcilable.”

That comes from https://firstlook.org/theintercept/2015/07/15/former-google-exec-turned-obama-official-wont-say-believes-magical-solution-encryption-debate/ and the next sentence is:

"But he won’t speculate as to how that’s possible."

Well I can. It's not at all difficult to think up at least one reasonable way to do it. I attribute the idea to Aaron Swartz, who said before he died that he wanted his entire e-mail archive to be made publicly available.

We can reconcile the requirements of privacy, security and transparency simply by making one single global secure Internet, using the strongest cryptography possible, available to every and all people of all nations. And we build that system in such a way that security and integrity checks apply not only to the data that is being stored and communicated, but to the records of access and update of that data.
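One way to picture integrity checks on the access records themselves is a hash-chained log, in which every entry's digest covers the entry before it. The following is only a minimal sketch under stated assumptions: the record fields (`who`, `what`, `purpose`), the function names and the use of SHA-256 are all invented for this illustration, and it says nothing about who gets to hold the log.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder digest standing in for "no previous entry"


def entry_digest(prev_digest: str, record: dict) -> str:
    """Digest of a record chained to the digest of the entry before it."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_digest.encode("ascii") + payload).hexdigest()


def append(log: list, record: dict) -> None:
    """Append an access record, chaining it to the current end of the log."""
    prev = log[-1]["digest"] if log else GENESIS
    log.append({"record": record, "digest": entry_digest(prev, record)})


def verify(log: list) -> bool:
    """Recompute the whole chain; any altered record breaks it."""
    prev = GENESIS
    for entry in log:
        if entry["digest"] != entry_digest(prev, entry["record"]):
            return False
        prev = entry["digest"]
    return True


log = []
append(log, {"who": "agency-A", "what": "read mailbox", "purpose": "stated purpose 1"})
append(log, {"who": "agency-B", "what": "read mailbox", "purpose": "stated purpose 2"})
print(verify(log))                       # the untouched log verifies
log[0]["record"]["purpose"] = "edited"   # quietly rewrite history
print(verify(log))                       # the chain no longer verifies
```

A chain like this only detects tampering by someone who cannot also rewrite every later digest, so in practice the latest digest would have to be published or held by several independent parties.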

I have no objection whatsoever to the Government of the Plurinational State of Bolivia, or those of the Russian Federation, or the United States or the UK, reading any of my communications, provided they are prepared to tell me truthfully for exactly what purpose they require that information, and provided that they can prove that this purpose is in the interests of the common good of all of Humanity for all time.

Now the problem with this idea, I suspect, is that the only government in the world that is potentially capable of providing such a proof is that of the Plurinational State of Bolivia.

I say potentially capable, because it is not, as far as I know, actually capable of doing this, yet. The problem is that we do not have any reason to believe that any computer or communications system in use in Bolivia is in fact secure. So when the government of the Plurinational State of Bolivia accesses my data, though they may be able to demonstrate that their intention is for the common good, they will not be able to demonstrate that they are the only ones accessing that data, and that it will not be accessed by other unknown parties whose interests are not those of the common good.

Of course the same objection holds for any government of any nation. So the problem we all have to solve, and solve urgently and effectively, is how to provide permanent access to verifiably secure communications and computation to every person on earth.

jueves, 16 de julio de 2015

Final Cause in Telecommunications

Here's an amusing example of final cause. In 2014, in his e-mail signature, Richard Stallman objects to Skype because it's non-free (freedom denying!) software. He says "use a telephone call" instead.

--

Dr Richard Stallman

President, Free Software Foundation

51 Franklin St

Boston MA 02110

USA

www.fsf.org www.gnu.org

Skype: No way! That's nonfree (freedom-denying) software.

Use Ekiga or an ordinary phone call.

Then 29 years earlier, Edsger W. Dijkstra points out that Stallman is functionally illiterate.

"Two comments to this question. One comment is that your view of industrial programs as pointed out in the question is narrow. There are all sorts of programs that hardly have users, if you think of a telephone exchange, or digital controls in cars or airplanes. As to the programming products that are used by people, I hardly have first hand experience, my impression is that an enormous amount of user time is wasted figuring out what the system does and how to control it, which is the consequence of two sorts of happenings. First of all that the designers have failed to keep the interface of a system as simple as possible - which is a challenge; but as soon as you realize that the main challenge of computer science is how not to get lost in the complexities of their own making, it is quite clear that this is a major task. Secondly, the scene is very much burdened by the fact that a large fraction of the people involved are functionally illiterate; particularly in the United States."

Now that's obvious to us now, but perhaps it wouldn't have been if Ed hadn't pointed it out so bluntly.

That's how the future reaches back to affect the past.

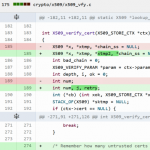

The Mother of all Software Vulnerabilities

In August last year I wrote a document describing how we could secure the GNU toolchain from possible subversion, as well as make all the software a lot better.

I sent it first by e-mail to Richard Stallman, Linus Torvalds and Theo deRaadt, amongst others. Then when I received no meaningful response from any of these people, I sent it to the public guile-devel mailing list.

Stallman's response (by private e-mail to me) was rather feeble. He did not seem to understand the security problem. So I explained it more explicitly in another post to guile-devel, hoping to widen the discussion to include people who might actually provide better-considered responses to the document.

That didn't work either, so I tried to explain it yet more clearly in a blog post, where I pointed out the original publication of the problem often mis-attributed to Ken Thompson.

To my pleasant surprise Roger Schell responded shortly afterwards, referring us to a paper he had written a decade earlier, which corroborated everything I had claimed.

To make the point, I gave some details to show how easy it would be to devise an object code trap-door that would survive all but a major restructuring of the compiler source code.

Stallman's feeble response was that he did not have time to read the 100 or so lines of program code I had given, which implemented a PROLOG interpreter which could search for the relevant patterns in the compiler source to identify the target source even when its structure was altered from version to version. I responded in private that he seemed to think I had time to read over 60MB [correction: that should read 600MB] of source code that comprises the GCC compiler source distribution. He had nothing to say in response to that.

It didn't go much further than that. There was a lot of smoke on the guile-devel list from people who thought they knew what I was saying, but who made it very clear that they had actually missed the point entirely. But clearly some people did understand exactly what I was saying.

I also explained how we can solve the problem of hardware subversion using similar techniques.

Then a few months later, Edward Snowden dug around his "archives" and came up with this lovely little snippet, describing how "researchers" at Sandia National Labs. had actually done exactly this, to hack Apple's XCode development toolchain, and this was the origin of the MacOS and iOS hacks described here.

It is clear then that subversion by object code trap-doors is a real, extant threat, and so probably is subversion by system initialization trap-doors, though there are very few people capable of understanding what that might be. Given the difficulty of detection of these things, we have no good reason to believe that any toolchain based on the GNU C compiler has not been effectively subverted. The same "whacking" that the little boys at Sandia gave the Apple XCode gcc compiler, will be an easy-enough port to OpenBSD, Debian Linux etc.

So I pointed out the problem, and it was subsequently confirmed to be a real extant exploit. But I also pointed out the solution, which is to formally specify programs using intensional semantics as application-specific languages, and then automate the actual generation of programs implementing those specifications.

Now there is a bit of a hoo-hah about unreliable insecure commercial and open-source software, and I wonder why no-one still has any response to my suggestions? What is the problem? Can anyone tell me what I've missed? Is there a big metaprogramming project that will soon solve all this?

miércoles, 15 de julio de 2015

Modern Economics

Here's a little riddle for the Freemasons. You'll need to learn some Geometry before you can solve this one:

You are two little men, and one of you is sucking the other's cock while he fucks you up the arse. Where is your head?

Now for the 33 degree Freemasons, here's the really hard version for which you will need to learn about both Geometry and Arithmetic (modulo n):

You are any pair (i and i+1, say) of n little men, and one of you is sucking the other's cock while he fucks you up the arse. Which of you has the most money?

Now for the 108 degree Freemasons, you will need to know Geometry, Arithmetic, Stereometry and also what a Galois Connection is.

You are one of n little men, arranged on the surface of the earth, and you are sucking one of the other's cock while you fuck another up the arse. Is the total amount of Global Wealth increasing or is it decreasing?

martes, 14 de julio de 2015

Software is Not Even a Load of Utter Crap

Below is what the Ars Technica UK Security page looks like today. The verdict is pretty clear. Software isn't an eco-system. The ecosystem is a product of Intelligent Design, and modern software is only a junk-yard full of useless garbage that won't even rot properly. Any kind of shit at all is worth more than modern software is worth.

Now the stupidest thing to do would be to start writing the same useless crap all over again. Instead, we need to design application-specific languages and interpreters for those languages. Then instead of boring ourselves stupid by writing a load of broken code by hand, we write the interpreters which will write the code implementing the specifications we write in those application-specific languages. Then whenever we find that we've done yet something else incredibly fucking stupid, we just change the interpreters, and regenerate all the applications, thereby fixing all the fuck-witted bugs in all the applications, all at one fell swoop.
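As a toy illustration of the idea, here is a minimal sketch in which two "applications" are specified in a tiny expression language and a generator writes the Python source that implements them. Everything here is hypothetical and invented for the example: the tuple-based specification language, the `SPECS` table and the function names. The point is only that the applications are regenerated from their specifications, so a fix to `emit` or `generate` reaches all of them at once.

```python
# Specifications in a tiny, made-up expression language:
#   ("num", n) | ("arg", name) | ("add", a, b) | ("mul", a, b)
SPECS = {
    "area_of_rectangle": ("mul", ("arg", "width"), ("arg", "height")),
    "perimeter_of_rectangle": (
        "mul", ("num", 2), ("add", ("arg", "width"), ("arg", "height"))),
}


def arg_names(expr, found=None):
    """Collect argument names in order of first appearance."""
    found = [] if found is None else found
    tag = expr[0]
    if tag == "arg":
        if expr[1] not in found:
            found.append(expr[1])
    elif tag in ("add", "mul"):
        arg_names(expr[1], found)
        arg_names(expr[2], found)
    return found


def emit(expr):
    """Translate a specification expression into Python source text."""
    tag = expr[0]
    if tag == "num":
        return repr(expr[1])
    if tag == "arg":
        return expr[1]
    if tag == "add":
        return f"({emit(expr[1])} + {emit(expr[2])})"
    if tag == "mul":
        return f"({emit(expr[1])} * {emit(expr[2])})"
    raise ValueError(f"unknown expression: {expr!r}")


def generate(name, expr):
    """Write the source of one generated function."""
    params = ", ".join(arg_names(expr))
    return f"def {name}({params}):\n    return {emit(expr)}\n"


def build(specs):
    """Regenerate every application from its specification."""
    namespace = {}
    for name, expr in specs.items():
        exec(generate(name, expr), namespace)
    return {name: namespace[name] for name in specs}


apps = build(SPECS)
print(generate("area_of_rectangle", SPECS["area_of_rectangle"]))
print(apps["perimeter_of_rectangle"](3, 4))
```

A real system would of course target something lower-level than Python source, but the shape is the same: specifications in, generated programs out, with no hand-written application code in between.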

Adobe Flash isn't anything more than an horrendously badly written interpreter for an horrendously badly designed language that in fact hardly does anything at all. So if we want to replace it we just design a decent abstraction of a few different types of processor, and a few application languages: for example, one language for specifying vector graphics, another for raster graphics and applying filters and whatever, another for scheduling animated graphics and audio streams, another for processing real-time user input events and another for remote procedure calls over network connections.

Then we design a language for specifying raster graphic constructions at around the level at which the pixman primitives work, and another for specifying drawing at the level of Bézier curves, fills, etc., around the level at which Cairo works. Then we design a language for specifying glyphs in fonts using those primitives, and we produce a FEW really good fonts by interpreting languages that were designed to specify those particular fonts. Then we define a language for specifying the abstract topology of the characters, and interpret those glyph programs into the font-specific languages, which we in turn interpret into the language for specifying raster graphics primitives. Then when someone defines a Greek capital Sigma, they just write the description of the topology and the Sigma appears in Arial, Times New Roman and Garamond No 8, all at the same time, and perfectly matching all those fonts.

Then we can interpret those raster graphics primitives as generic abstract assembler code, and then reinterpret the results as specific assembler, which we JIT compile to some specific processor model and graphics display, both of which are also formally specified using languages designed for exactly that purpose. Then we flash that JIT compiler into the machine's BIOS ROM area, and it boots in 0.5 seconds, functions perfectly, and only uses around 2MB of code space. Even if the machine is a 10 year old laptop wired to an old car battery, for 90% of what most people would want to do with it, it is just as fast as a brand new machine costing $2,000. And the application is not just a Flash browser, it does far more than anything anyone could ever imagine wanting to use that computer to do.

That's all you need to know, now go to it ladies. I'm sorry I can't be more help, but I told you all this a year ago, and my reward was that I got left to starve in the streets of La Paz in winter. I rarely eat more than one meal a day, and often none at all, and my health is deteriorating rapidly.

So if you need me to help you, then some of you are going to have to lift yourselves out of your self-indulgent torpor and work out how you can help me to help you. Otherwise I am going to die, and quite frankly I am looking forward to it because the less time I spend in this stupid world, run by idiots and madmen, the better. If you don't want to do what I tell you you need to do to solve these silly little problems, and also you can't tell me why it is that you know that you know better than I do, then I don't want to hear anything from you at all, I just want out.

So, is there anyone, anyone at all in this whole wide world, that can come up with an intelligent response to this? Oh go on, please, please, surprise me, I've earned it!

Ian

SPECULATION RUN AMOK

Now the stupidest thing to do would be to start writing the same useless crap all over again. Instead, we need to design application-specific languages and interpreters for those languages. Then instead of boring ourselves stupid by writing a load of broken code by hand, we write the interpreters which will write the code implementing the specifications we write in those application-specific languages. Then whenever we find that we've done yet another incredibly fucking stupid thing, we just change the interpreters and regenerate all the applications, thereby fixing all the fuck-witted bugs in all the applications in one fell swoop.
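To make that concrete, here is a minimal sketch in Python. Everything in it is an assumption made up for illustration: the one-line spec format, the names `generate_v1`, `generate_v2` and `build`, and the two toy "applications". It only shows the shape of the idea: the bug lives in the interpreter, so fixing the interpreter and regenerating fixes every generated function at once.

```python
# Hypothetical one-line specs: "<parameter> <minimum> <maximum>".
SPECS = {
    "volume": "level 0 100",
    "brightness": "level 0 255",
}

def generate_v1(name, spec):
    """First interpreter: has a bug, it forgets the lower bound."""
    param, lo, hi = spec.split()
    return (
        f"def set_{name}({param}):\n"
        f"    return min({int(hi)}, {param})\n"
    )

def generate_v2(name, spec):
    """Corrected interpreter: clamps to both bounds."""
    param, lo, hi = spec.split()
    return (
        f"def set_{name}({param}):\n"
        f"    return max({int(lo)}, min({int(hi)}, {param}))\n"
    )

def build(specs, generate):
    """Regenerate every function from its spec with the given interpreter."""
    namespace = {}
    for name, spec in specs.items():
        exec(generate(name, spec), namespace)
    return namespace

buggy = build(SPECS, generate_v1)
fixed = build(SPECS, generate_v2)
print(buggy["set_volume"](-5), fixed["set_volume"](-5))  # prints: -5 0
```

Nobody touched the specs between the two builds. Only the interpreter changed.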

Adobe Flash isn't anything more than a horrendously badly written interpreter for a horrendously badly designed language that in fact hardly does anything at all. So if we want to replace it we just design a decent abstraction of a few different types of processor, and a few application languages: for example, one language for specifying vector graphics, another for raster graphics and applying filters and whatever, another for scheduling animated graphics and audio streams, another for processing real-time user input events, and another for remote procedure calls over network connections.

Then we design a language for specifying raster graphic constructions at around the level at which the pixman primitives work, and another for specifying drawing at the level of Bézier curves, fills, etc., around the level at which Cairo works. Then we design a language for specifying glyphs in fonts using those primitives, and we produce a FEW really good fonts by interpreting languages that were designed to specify those particular fonts. Then we define a language for specifying the abstract topology of the characters, and interpret those glyph programs into the font-specific languages, which we in turn interpret into the language for specifying raster graphics primitives. Then when someone defines a Greek capital Sigma, they just write the description of the topology and the Sigma appears in Arial, Times New Roman and Garamond No 8, all at the same time, and perfectly matching all those fonts.
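A rough sketch of the Sigma example, in Python, under loud assumptions: the topology is reduced to four straight strokes between named anchor points, the two "fonts" are made-up parameter sets (not the real metrics of Arial, Times New Roman or any other typeface), and the output is a list of hypothetical `line` primitives rather than pixman or Cairo calls.

```python
# Abstract topology of a capital Sigma: strokes between named anchor
# points. There are no coordinates at this level.
SIGMA = [
    ("top_right", "top_left"),
    ("top_left", "centre"),
    ("centre", "bottom_left"),
    ("bottom_left", "bottom_right"),
]

# Hypothetical font-specific parameters, invented for this sketch.
FONTS = {
    "sans": {"width": 60, "height": 100, "stroke": 8, "waist_x": 33},
    "serif": {"width": 64, "height": 100, "stroke": 5, "waist_x": 30},
}

def anchors(font):
    """Font-specific step: place the named anchor points."""
    w, h = font["width"], font["height"]
    return {
        "top_left": (0, h),
        "top_right": (w, h),
        "centre": (font["waist_x"], h // 2),
        "bottom_left": (0, 0),
        "bottom_right": (w, 0),
    }

def interpret(topology, font):
    """Interpret a topology in one font, yielding drawing primitives."""
    pts = anchors(font)
    return [("line", pts[a], pts[b], font["stroke"]) for a, b in topology]

for name, font in FONTS.items():
    print(name, interpret(SIGMA, font))
```

The glyph is written once, as `SIGMA`, and each font contributes only its own parameters. A real system would need curves, serifs and hinting, which this leaves out entirely.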

Then we can interpret those raster graphics primitives as generic abstract assembler code, and then reinterpret the results as specific assembler, which we JIT compile to some specific processor model and graphics display, both of which are also formally specified using languages designed for exactly that purpose. Then we flash that JIT compiler into the machine's BIOS ROM area, and it boots in 0.5 seconds, functions perfectly, and uses only around 2MB of code space. Even if the machine is a 10-year-old laptop wired to an old car battery, for 90% of what most people would want to do with it, it is just as fast as a brand new machine costing $2,000. And the application is not just a Flash browser: it does far more than anything anyone could ever imagine wanting to use that computer to do.
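The two-stage lowering can be sketched like this, again in Python and again with invented names. The abstract operations, the `toy_risc` and `toy_cisc` target tables and all their mnemonics are hypothetical and belong to no real instruction set. The sketch only prints assembler text. It does not JIT compile anything.

```python
def lower_hline(x0, x1, y, colour):
    """Stage 1: one raster primitive as generic, machine-independent ops."""
    return [
        ("set", "a", x0),
        ("set", "b", y),
        ("set", "c", colour),
        ("label", "span"),
        ("store_pixel", "a", "b", "c"),
        ("inc", "a"),
        ("branch_lt", "a", x1, "span"),
    ]

# Hypothetical target descriptions: made-up mnemonics, not any real ISA.
TARGETS = {
    "toy_risc": {
        "regs": {"a": "r1", "b": "r2", "c": "r3"},
        "set": "li {0}, {1}",
        "label": "{0}:",
        "store_pixel": "stpx {0}, {1}, {2}",
        "inc": "addi {0}, {0}, 1",
        "branch_lt": "blti {0}, {1}, {2}",
    },
    "toy_cisc": {
        "regs": {"a": "ax", "b": "bx", "c": "cx"},
        "set": "mov {0}, {1}",
        "label": "{0}:",
        "store_pixel": "plot {0}, {1}, {2}",
        "inc": "inc {0}",
        "branch_lt": "cjl {0}, {1}, {2}",
    },
}

def select(ops, target):
    """Stage 2: reinterpret generic ops as one target's assembler text."""
    regs = target["regs"]
    lines = []
    for name, *args in ops:
        # Abstract register names map to target registers; numbers and
        # labels pass through unchanged.
        mapped = [regs.get(arg, arg) for arg in args]
        lines.append(target[name].format(*mapped))
    return lines

ops = lower_hline(2, 5, 7, 255)
for name, target in TARGETS.items():
    print(name, select(ops, target))
```

The target table is itself data, which is the point of the paragraph above: the processor is described in a small language of its own, and supporting a new machine means writing a new description rather than new code.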

That's all you need to know, now go to it, ladies. I'm sorry I can't be more help, but I told you all this a year ago, and my reward was that I got left to starve in the streets of La Paz in winter. I rarely eat more than one meal a day, and often none at all, and my health is deteriorating rapidly.

So if you need me to help you, then some of you are going to have to lift yourselves out of your self-indulgent torpor and work out how you can help me to help you. Otherwise I am going to die, and quite frankly I am looking forward to it because the less time I spend in this stupid world, run by idiots and madmen, the better. If you don't want to do what I tell you you need to do to solve these silly little problems, and also you can't tell me why it is that you know that you know better than I do, then I don't want to hear anything from you at all, I just want out.

So, is there anyone, anyone at all in this whole wide world, that can come up with an intelligent response to this? Oh go on, please, please, surprise me, I've earned it!

Ian

SPECULATION RUN AMOK

- Attack code has already been published, all but assuring exploits will go wild.

by Dan Goodin - Jul 14, 2015 11:32am AST

Hacking Team broke Bitcoin secrecy by targeting crucial wallet file

Leaked e-mails brag HT could see "who got that money (DEA: anyone interested? :P )"

by Cyrus Farivar - Jul 14, 2015 9:55am AST

FLASH, AH AHH. NOT THE SAVIOUR OF THE UNIVERSE.

Firefox blacklists Flash player due to unpatched 0-day vulnerabilities

Also, Facebook calls for Flash end-of-life, so that we can "upgrade the whole ecosystem."

by Sebastian Anthony - Jul 14, 2015 6:52am AST

DATA DOCTOR

UK startup Privitar wants to protect your data against big database hacks

"A global bank and a large telco provider" are already on board.

by Mark Walton - Jul 14, 2015 5:29am AST

"RELY ON US?"

Hacking Team touts new spyware suite, calls leaks now “obsolete”

"This is a total replacement for the existing ‘Galileo’ system, not simply an update."

by Cyrus Farivar - Jul 14, 2015 3:32am AST

PWNAGEDDON!

Java and Flash both vulnerable—again—to new 0-day attacks

Java bug is actively exploited. Flash flaws will likely be targeted soon.

by Dan Goodin - Jul 13, 2015 12:11pm AST

Hacking Team orchestrated brazen BGP hack to hijack IPs it didn’t own

Hijacking was initiated after Italian Police lost control of infected machines.

by Dan Goodin - Jul 13, 2015 9:45am AST

STAFF BLOG

OPM got hacked and all I got was this stupid e-mail

I'm mad as hell and want to see some accountability for once.

by Jonathan M. Gitlin - Jul 12, 2015 4:35am AST

Hacking Team’s Flash 0-day: Potent enough to infect actual Chrome user

Government-grade attack code, including Windows exploit, now available to anyone.

by Dan Goodin - Jul 11, 2015 4:30am AST

How a Russian hacker made £30,000 selling a zero-day Flash exploit to Hacking Team

"Volume discounts are possible if you take several bugs."

by Cyrus Farivar - Jul 10, 2015 12:13pm AST

Critical OpenSSL bug allows attackers to impersonate any trusted server

Fortunately, most apps not affected by flaw giving attacker CA powers.

by Dan Goodin - Jul 9, 2015 11:53am AST

"I FEEL FINE"

Simultaneous downing of NY Stock Exchange, United, and WSJ.com rattles nerves

No, the outages weren't part of some cyber attack, White House officials say.

by Dan Goodin - Jul 9, 2015 8:25am AST

Despite Hacking Team’s poor opsec, CEO came from early days of PGP

But by 2015, David Vincenzetti was "sceptical about encrypted" e-mail with clients.

by Cyrus Farivar - Jul 8, 2015 3:35pm AST

TOLD YA SO

Adobe Flash exploit that was leaked by Hacking Team goes wild; patch now!

Hours after the 0day was found, it was added to popular exploit kits.

by Dan Goodin - Jul 8, 2015 11:32am AST

Meet the hackers who break into Microsoft and Apple to steal insider info

Almost 50 companies have been hacked by a shadowy group.

by Dan Goodin - Jul 8, 2015 10:41am AST

Days after Hacking Team breach, nobody fired, no customers lost

Eric Rabe: "The company is certainly in operation, we have a lot of work to do."

by Cyrus Farivar - Jul 8, 2015 6:05am AST

Hacking Team leak releases potent Flash 0day into the wild

Windows and Android phones may be affected by other leaked exploits.

by Dan Goodin - Jul 7, 2015 2:34pm AST

Massive leak reveals Hacking Team’s most private moments in messy detail

Imagine "explaining the evilest technology on earth," company CEO joked last month.

by Dan Goodin - Jul 7, 2015 2:49am AST

Hacking Team gets hacked, invoices suggest spyware sold to repressive governments

Invoices purport to show Hacking Team doing business in Sudan and other rogue nations.

by Dan Goodin - Jul 6, 2015 12:48pm AST

Friday, 10 July 2015

Insecurity Sells

Here's a nice story.

http://arstechnica.co.uk/security/2015/07/how-a-russian-hacker-made-45000-selling-a-zero-day-flash-exploit-to-hacking-team/

I have a lot of respect for Vitaliy Toropov. He's professional, and very good at his job. He clearly doesn't care too much for money either, because there are probably plenty of even less savoury organizations (run by much smarter people, too) who would have been happy to pay 5 times what he was asking for that exploit.

It's a pity someone so intelligent has to spend all their time fishing around the gutter to earn a living. He would be much better employed designing software production systems that solved all these kinds of problems. But there are any number of learned idiots around who will say "it's an imponderable" or that "people need the intermediate level representations". They're wrong. Computer programs can write better programs than any number of people could, no matter where they got their PhDs.

Wednesday, 8 July 2015

Automatic Translation

Current attempts to automatically translate texts are doomed to failure for the simple reason that language evolves. Therefore the semantics, in other words the meaning of a text, depends crucially on the context in which that text appears, but the context is not evident in the text itself. Chomsky provided a famous example of the phenomenon in the sentence

Colourless green ideas sleep furiously.

Chomsky probably meant this as an example of a grammatically correct English sentence which was not in fact meaningful. But the context is everything, and it doesn't take much of a poet to imagine a context in which the above sentence is in fact meaningful; try it.

The problem is that the text is an extensional representation of some intensional semantics. The intensional semantics are the semantics the author intended the text to represent. Now there are any number of ways the author (or poet) could have chosen to express that intensional meaning, and this is but one of them. However, the intensional meaning is not explicit in any one of those extensional texts.

Rather than banging our heads against the proverbial brick wall of translating informally written texts from one language to another, we could make some real progress by concentrating our efforts on defining formal languages to express the intensional semantics of what we wish to say. Then we could interpret those formal expressions of semantics as if they were computer programs, and thereby automatically generate the extensional expressions in any context where they are needed. That context will include not only the language in which they are written, but the particular period and style of expression.

Such formal expressions of intensional semantics could in principle be translated perfectly into any known written language. Furthermore, those translations could be formally proved to be faithful representations of the intended meaning of the texts.
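As a toy illustration only, here is what generating extensional text from one formal expression might look like in Python. The `Claim` structure, the two-language `LEXICON` and the `render` function are all assumptions invented for this sketch. It handles word choice and adjective order for a single sentence shape, and says nothing about context, period, style or proofs of faithfulness.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Claim:
    """One formal statement: qualified agents perform an action in a manner."""
    agent: str
    qualities: tuple  # innermost quality first
    action: str
    manner: str

# Hypothetical per-language rules, just enough for this one example.
LEXICON = {
    "en": {
        "article": "",
        "adjectives_before_noun": True,
        "words": {"ideas": "ideas", "green": "green",
                  "colourless": "colourless", "sleep": "sleep",
                  "furiously": "furiously"},
    },
    "es": {
        "article": "las",
        "adjectives_before_noun": False,
        "words": {"ideas": "ideas", "green": "verdes",
                  "colourless": "incoloras", "sleep": "duermen",
                  "furiously": "furiosamente"},
    },
}

def render(claim, lang):
    """Interpret the formal claim as a sentence in the given language."""
    rules = LEXICON[lang]
    words = rules["words"]
    noun = words[claim.agent]
    quals = [words[q] for q in claim.qualities]
    if rules["adjectives_before_noun"]:
        phrase = " ".join(quals[::-1] + [noun])
    else:
        phrase = " ".join([noun] + quals)
    if rules["article"]:
        phrase = rules["article"] + " " + phrase
    sentence = f"{phrase} {words[claim.action]} {words[claim.manner]}."
    return sentence[0].upper() + sentence[1:]

claim = Claim("ideas", ("green", "colourless"), "sleep", "furiously")
print(render(claim, "en"))  # Colourless green ideas sleep furiously.
print(render(claim, "es"))  # Las ideas verdes incoloras duermen furiosamente.
```

Neither sentence is translated from the other. Both are generated from the same `claim`.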
